这个算是我的第一次爬虫经验,虽然很容易就实现了,代码量相当的少,也挺满足了,自己也是从菜鸟慢慢的爬起来的,虽然现在也挺菜的….

爬取的目标是:http://www.budejie.com/

工具:

Linux

python 3

Sublime Text 3

终端

需要用到的模块:

urllib

BeautifulSoup

lxml

urllibpython3里面已经自带了,所以需要下载的只有BeautifulSoup和lxml

安装方法:

pip3 bs4

pip3 lxml

注:BeautifulSoup在bs4下面,所以直接安装bs4

再注:lxml模块在windows下安装是会报错的,Google一下解决方法吧

好了,把东西都全部齐全之后,开始吧!

下面是爬视频的代码:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

| # -*- coding: utf-8 -*-

# 引入模块

import urllib.request

from bs4 import BeautifulSoup

import os

import urllib.error

def Replace(url):

url = url.replace("mvideo","svideo")

url = url.replace("cn","com")

url = url.replace("wpcco","wpd")

return url

time = 1

while True:

url = "http://www.budejie.com/video/"+str(time)

headers = {"User-Agent":"Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/54.0.2840.71 Safari/537.36"}

url_Request = urllib.request.Request(url,headers=headers)

try:

url_open = urllib.request.urlopen(url_Request)

except urllib.error.HTTPError as e:

print("页面没有找到",e.code)

url_soup = BeautifulSoup(url_open.read().decode("utf-8"),'lxml')

try:

url_div = url_soup.find("div",class_="j-r-c").find_all("div",class_=" j-video")

url_name = url_soup.find_all("li",class_="j-r-list-tool-l-down f-tar j-down-video j-down-hide ipad-hide")

except AttributeError as f:

print("已经爬完了...这个网站页面不多~不信自己翻一翻")

break

name = [] #用来储存视频的名字

div = [] # 用来储存视频的地址

replace_name = [] # 将视频名字中的一些特殊字符换掉

for i in url_name:

name.append(i["data-text"])

for z in name:

z = z.replace("\"","'")

replace_name.append(z)

for x in url_div:

video_url = Replace(x["data-mp4"])

div.append(video_url)

print("------------------------------------")

print("正在下载第 %s 页" % time)

for z in zip(replace_name,div):

try:

print("Downloading... %s" % z[0])

try:

urllib.request.urlretrieve(z[1],"baisibudeqijie_video//%s.mp4" % z[0])

print("%s Download complite!" % z[0])

except OSError as o:

print("文件出错啦~可能是有特殊符号~也有可能是链接消失啦~这张就下载不了啦~",o)

except UnicodeEncodeError as u:

print("你用的可能是windows系统吧~这里报错“GBK”了~用Linux系统吧~")

print("或者在cmd窗口下输入: chcp 65001 就可以改成'utf-8'了")

except urllib.error.HTTPError as f:

print("图片链接没有找到,可能是被删除了吧~",f.code)

except FileNotFoundError as e:

print("视频链接没有找到,可能被删除了吧~",e)

pass

print("第 %s 页已下载完成" % time)

print("------------------------------------")

time+=1

|

下面是爬取图片的,其实和视频差不多

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

| # -*- coding: utf-8 -*-

import urllib.request

from bs4 import BeautifulSoup

import os

import urllib.error

time = 1

while True:

url = "http://www.budejie.com/pic/"+str(time)

headers = {"User-Agent":"Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/54.0.2840.71 Safari/537.36"}

url_Request = urllib.request.Request(url,headers=headers)

try:

url_open = urllib.request.urlopen(url_Request)

except urllib.error.HTTPError as f:

print("页面没有找到",f.code)

url_soup = BeautifulSoup(url_open.read().decode("utf-8"),'lxml')

try:

url_div = url_soup.find("div",class_="j-r-list").find_all("img")

except AttributeError as e:

print("已经爬完了,这个网页的页面不多~不信自己翻一翻")

break

url_img = [] # 图片的地址

url_name = [] # 图片的名字

for i in url_div:

url_img.append(i["data-original"])

url_name.append(i["alt"])

url_reght = [] # 这个网站的图片会有一些不是图片的链接出现,没有后缀的~下载下来也没用,把它去掉

a = [".gif",".png",".jpg"]

for i in url_img:

if i[-4:] in a:

url_reght.append(i)

url_name_split = [] # 因为字符串太长,储存的时候会报错,就截取','前面第一个

for name_split in url_name:

url_name_split.append(name_split.split(","))

print("--------------------------------")

print("正在下载第 %s 页" % time)

for download in zip(url_name_split,url_reght):

try:

print("Download... %s" % download[0][0])

try:

urllib.request.urlretrieve(download[1],"baisibudeqijie_img//%s" % (download[0][0]+download[1][-5:]))

except OSError as o:

print("文件出错啦~可能是有特殊符号~也有可能是链接消失啦~这张就下载不了啦~",o)

print("%s download complite!" % download[0][0])

except UnicodeEncodeError as u:

print("你用的可能是windows系统吧~这里报错“GBK”了~用Linux系统吧~")

print("或者在cmd窗口下输入: chcp 65001 就可以改成'utf-8'了")

except urllib.error.HTTPError as f:

print("图片链接没有找到,可能是被删除了吧~",f.code)

except FileNotFoundError as e:

print("图片链接没有找到,可能是被删除了吧~",e)

pass

print("第 %s 页已经下载完成" % time)

print("--------------------------------")

time+=1

|

好了,爬取 ”百思不得姐“ 的视频和图片就这么多代码,是不是相当简单,如果用requests就更简单了

我们把这两个合起来:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

| # -*- condig: utf-8 -*-

import urllib.request

from bs4 import BeautifulSoup

import os

import urllib.error

def Replace(url):

url = url.replace("mvideo","svideo")

url = url.replace("cn","com")

url = url.replace("wpcco","wpd")

return url

def video():

time = 1

while True:

url = "http://www.budejie.com/video/"+str(time)

headers = {"User-Agent":"Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/54.0.2840.71 Safari/537.36"}

url_Request = urllib.request.Request(url,headers=headers)

try:

url_open = urllib.request.urlopen(url_Request)

except urllib.error.HTTPError as e:

print("页面没有找到",e.code)

url_soup = BeautifulSoup(url_open.read().decode("utf-8"),'lxml')

try:

url_div = url_soup.find("div",class_="j-r-c").find_all("div",class_=" j-video")

url_name = url_soup.find_all("li",class_="j-r-list-tool-l-down f-tar j-down-video j-down-hide ipad-hide")

except AttributeError as f:

print("已经爬完了...这个网站页面不多~不信自己翻一翻")

break

name = [] #用来储存视频的名字

div = [] # 用来储存视频的地址

replace_name = [] # 将视频名字中的一些特殊字符换掉

for i in url_name:

name.append(i["data-text"])

for z in name:

z = z.replace("\"","'")

replace_name.append(z)

for x in url_div:

video_url = Replace(x["data-mp4"])

div.append(video_url)

print("------------------------------------")

print("正在下载第 %s 页" % time)

for z in zip(replace_name,div):

try:

print("Downloading... %s" % z[0])

try:

urllib.request.urlretrieve(z[1],"baisibudeqijie_video//%s.mp4" % z[0])

print("%s Download complite!" % z[0])

except OSError as o:

print("文件出错啦~可能是有特殊符号~也有可能是链接消失啦~这张就下载不了啦~",o)

except UnicodeEncodeError as u:

print("你用的可能是windows系统吧~这里报错“GBK”了~用Linux系统吧~")

print("或者在cmd窗口下输入: chcp 65001 就可以改成'utf-8'了")

except urllib.error.HTTPError as f:

print("图片链接没有找到,可能是被删除了吧~",f.code)

except FileNotFoundError as e:

print("视频链接没有找到,可能被删除了吧~",e)

pass

print("第 %s 页已下载完成" % time)

print("------------------------------------")

time+=1

def img():

time = 1

while True:

url = "http://www.budejie.com/pic/"+str(time)

headers = {"User-Agent":"Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/54.0.2840.71 Safari/537.36"}

url_Request = urllib.request.Request(url,headers=headers)

try:

url_open = urllib.request.urlopen(url_Request)

except urllib.error.HTTPError as f:

print("页面没有找到",f.code)

url_soup = BeautifulSoup(url_open.read().decode("utf-8"),'lxml')

try:

url_div = url_soup.find("div",class_="j-r-list").find_all("img")

except AttributeError as e:

print("已经爬完了,这个网页的页面不多~不信自己翻一翻")

break

url_img = [] # 图片的地址

url_name = [] # 图片的名字

for i in url_div:

url_img.append(i["data-original"])

url_name.append(i["alt"])

url_reght = [] # 这个网站的图片会有一些不是图片的链接出现,没有后缀的~下载下来也没用,把它去掉

a = [".gif",".png",".jpg"]

for i in url_img:

if i[-4:] in a:

url_reght.append(i)

url_name_split = [] # 因为字符串太长,储存的时候会报错,就截取','前面第一个

for name_split in url_name:

url_name_split.append(name_split.split(","))

print("--------------------------------")

print("正在下载第 %s 页" % time)

for download in zip(url_name_split,url_reght):

try:

print("Download... %s" % download[0][0])

try:

urllib.request.urlretrieve(download[1],"baisibudeqijie_img//%s" % (download[0][0]+download[1][-5:]))

except OSError as o:

print("文件出错啦~可能是有特殊符号~也有可能是链接消失啦~这张就下载不了啦~",o)

print("%s download complite!" % download[0][0])

except UnicodeEncodeError as u:

print("你用的可能是windows系统吧~这里报错“GBK”了~用Linux系统吧~")

print("或者在cmd窗口下输入: chcp 65001 就可以改成'utf-8'了")

except urllib.error.HTTPError as f:

print("图片链接没有找到,可能是被删除了吧~",f.code)

except FileNotFoundError as e:

print("图片链接没有找到,可能是被删除了吧~",e)

pass

print("第 %s 页已经下载完成" % time)

print("--------------------------------")

time+=1

print("--------------------------------")

print("| 1.video |")

print("| 2.img |")

print("--------------------------------")

user = input("请选择1 or 2: ")

if user == "1":

print("正在获取页面...")

try:

os.mkdir("baisibudeqijie_video")

if os.path.exists("baisibudeqijie_video"):

os.rmdir("baisibudeqijie_video")

os.mkdir("baisibudeqijie_video")

except FileExistsError:

pass

video()

elif user == "2":

print("正在获取页面...")

try:

os.mkdir("baisibudeqijie_img")

if os.path.exists("baisibudeqijie_img"):

os.rmdir("baisibudeqijie_img")

os.mkdir("baisibudeqijie_img")

except FileExistsError:

pass

img()

else:

print("抱歉,选项里没有 '%s'" % user)

|

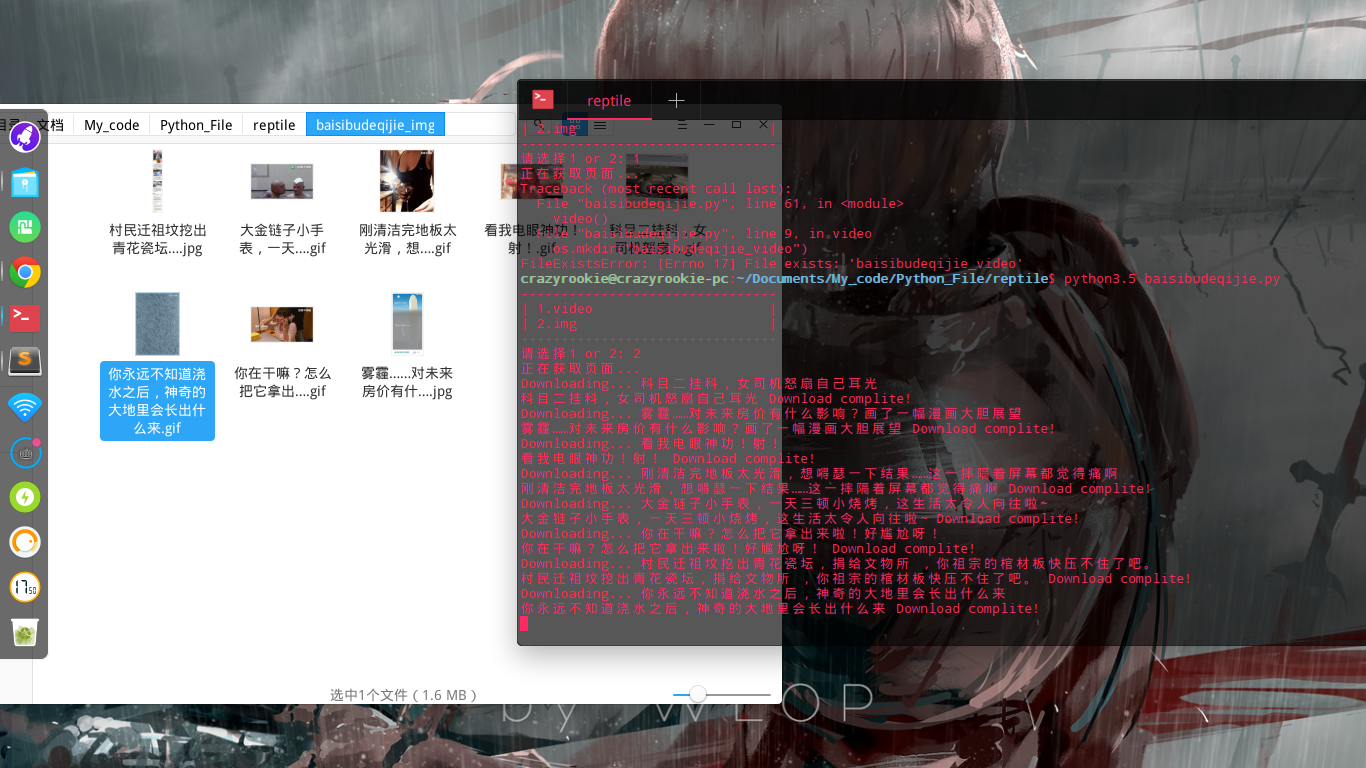

运行结果:

视频的运行结果是一样的~

后来我索性把这个网站的所以东西都爬了~包括音乐~段子~美女,这个是 源代码,参考参考吧~